Per-inference customization

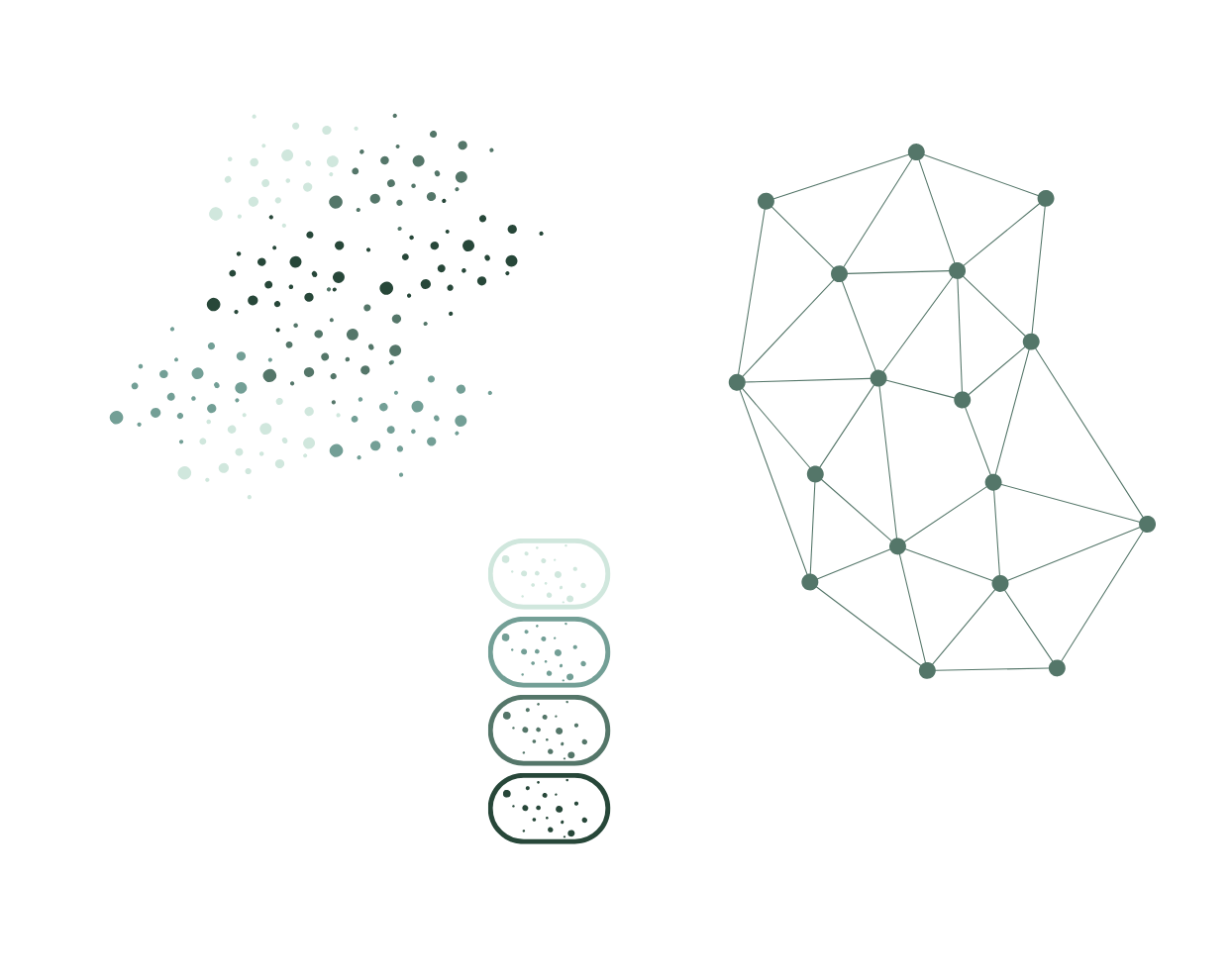

Models that learn by memorizing the distribution of their training data maximize accuracy around the dataset’s center of gravity and sacrifice performance on outliers, resulting in a “tyranny of the average.” With Orca’s active-learning memories, each inference pass can draw on its own memoryset, tailored to a specific use case or to a particular portion of a larger data distribution.

Customized accuracy

Orca lets you blend standard, global facts with specifics about individual users, geographies, or situations. These nuanced differences are typically lost or overfit during training; Orca, by contrast, can create forked experiences immediately and keep customizing an AI’s outputs for a specific population through ongoing usage and feedback. One example is reducing false positives in threat detection by blending threat intelligence with each customer’s security posture: at every inference, global threat-intel memorysets are combined with customer-specific posture memorysets to optimize accuracy.
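To make the blending idea concrete, here is a minimal Python sketch. It assumes a simple weighted k-nearest-neighbor vote over embedding vectors, with a fixed weight that lets customer-specific memories outvote global ones. `Memory`, `blend_predict`, and `customer_weight` are hypothetical names for illustration, not Orca’s API, and the tiny two-dimensional vectors stand in for real embeddings.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Memory:
    """One labeled memory: an embedding plus the label it supports (hypothetical type)."""
    embedding: list[float]
    label: str


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def blend_predict(query, global_mem, customer_mem, k=3, customer_weight=3.0):
    """Weighted k-NN vote over the union of a global and a customer memoryset.

    customer_weight is an illustrative assumption: it makes customer-specific
    memories count more than global ones when both are among the k nearest.
    """
    scored = [(cosine(query, m.embedding), 1.0, m) for m in global_mem]
    scored += [(cosine(query, m.embedding), customer_weight, m) for m in customer_mem]
    scored.sort(key=lambda item: item[0], reverse=True)  # most similar first

    votes = defaultdict(float)
    for sim, weight, mem in scored[:k]:
        votes[mem.label] += max(sim, 0.0) * weight  # ignore negative similarity
    return max(votes, key=votes.get)


# Toy data: global threat intel flags this traffic pattern as a threat, but
# this customer's posture memoryset records the same pattern as benign.
global_mem = [
    Memory([1.0, 0.0], "threat"),
    Memory([0.9, 0.1], "threat"),
    Memory([0.0, 1.0], "benign"),
]
customer_mem = [Memory([0.95, 0.05], "benign")]
query = [1.0, 0.02]

if __name__ == "__main__":
    print(blend_predict(query, global_mem, []))            # global memories only
    print(blend_predict(query, global_mem, customer_mem))  # global + customer posture
```

With only the global memoryset the query is labeled "threat"; adding the customer’s posture memory flips it to "benign". A weighted vote is just one possible blending rule, and the weight of 3.0 is arbitrary.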

Orca’s shift from “customize with many models” to “customize within one model” expands both the accuracy and the efficiency frontiers, overcoming the “tyranny of the average” and the challenge of model proliferation.

One model for many use cases

Because it can swap in a different memoryset for each inference, Orca avoids the operational headaches of maintaining multiple, highly similar versions of a model. Instead of maintaining many model and training-dataset pairs, a single Orca model covers many use cases by selecting from a library of memorysets, one per use case. For example, one health care analytics model can be personalized for every user, backed by a memoryset of that user’s own medical and environmental data.
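The "one model, many memorysets" pattern can be sketched as a library of memorysets keyed by use case, with a single prediction function that never changes. This is a toy Python sketch under stated assumptions: `MemorysetLibrary`, `knn_predict`, and the per-user heart-rate memories are hypothetical, and plain nearest-neighbor voting stands in for whatever model Orca actually uses.

```python
from collections import Counter

# A memory is (feature vector, label); a memoryset is a list of them.
Example = tuple[tuple[float, ...], str]


def squared_distance(a, b) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(query, memoryset: list[Example], k: int = 3) -> str:
    """The single shared 'model': a k-NN vote over whichever memoryset is passed in."""
    nearest = sorted(memoryset, key=lambda ex: squared_distance(query, ex[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


class MemorysetLibrary:
    """Hypothetical registry mapping a use case (here, a user) to its memoryset."""

    def __init__(self) -> None:
        self._sets: dict[str, list[Example]] = {}

    def register(self, use_case: str, memories: list[Example]) -> None:
        self._sets[use_case] = list(memories)

    def predict(self, use_case: str, query, k: int = 3) -> str:
        if use_case not in self._sets:
            raise KeyError(f"no memoryset registered for {use_case!r}")
        # Same model every time; only the memoryset is swapped per inference.
        return knn_predict(query, self._sets[use_case], k)


# Toy per-user memorysets: resting heart rate (bpm) labeled against each user's baseline.
library = MemorysetLibrary()
library.register("user_a", [((60.0,), "normal"), ((62.0,), "normal"), ((90.0,), "elevated")])
library.register("user_b", [((85.0,), "normal"), ((88.0,), "normal"), ((110.0,), "elevated")])

if __name__ == "__main__":
    print(library.predict("user_a", (86.0,), k=1))
    print(library.predict("user_b", (86.0,), k=1))
```

The same reading of 86 bpm comes back "elevated" against user A’s memoryset and "normal" against user B’s, with no second model to train or deploy; supporting a new user means registering another memoryset rather than retraining.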

Talk to Orca

Speak to our engineering team to learn how we can help you unlock high-performance agentic AI and LLM evaluation, real-time adaptive ML, and accelerated AI operations.