Model consolidation & ML/AI Ops automation

Managing numerous models creates ML/AI Ops processes that sap the bandwidth of highly skilled AI/ML engineers: retraining loops, data pipelines, deployments, and more. These tasks are critical for production systems, but they are time-consuming and distract from building new capabilities and systems. Significant capacity and velocity problems often stem from an “every use case needs its own model” approach, which teams adopt to work around the “tyranny of the average”: the difficulty of getting good accuracy across a broad range of data and use cases with a single model. In addition, manual processes across the build, deploy, and adapt life cycle delay time to impact and erode ROI.

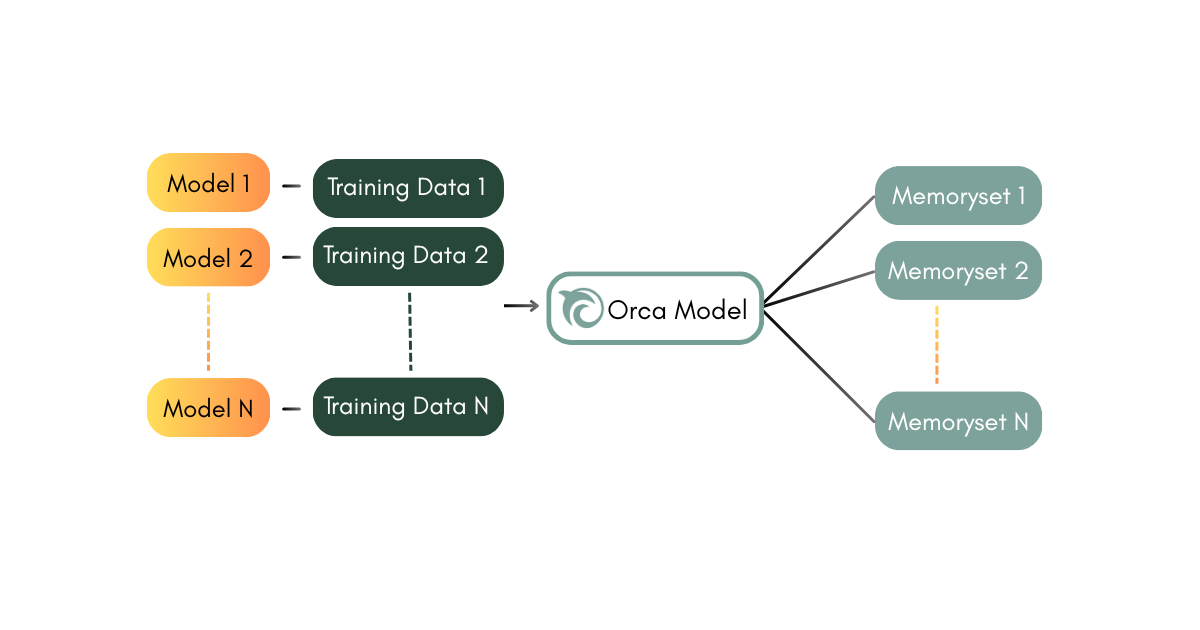

Orca enables a more efficient approach: a small number of models handles a large number of use cases by managing memory datasets, instead of managing model parameters and clusters of “almost the same, but not quite” models. Further, the automation unlocked by transparent reasoning, updatable memorysets, and online learning enables step-function improvements in engineering agility and efficiency.

Consolidated Models

At each inference, an Orca model can select from a library of memorysets, each corresponding to a different use case or to a portion of a larger dataset. This lets a single model cover a large number of use cases without the operational costs of model sprawl. One example is retail personalization: instead of generic recommendations for the entire user base, a single Orca model can attach to a specific user's memoryset at each inference and return recommendations curated to that user's preferences.
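
To make the "one model, many memorysets" idea concrete, here is a minimal sketch. It is not Orca's API: `Memoryset`, `SharedMemoryModel`, `register`, and `predict` are hypothetical names, and a plain k-nearest-neighbor vote over toy two-dimensional embeddings stands in for a memory-based model. The point it illustrates is that adding a user means registering a new memoryset, not training or deploying a new model.

```python
import math
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical types for illustration; not Orca's API.
Vector = tuple[float, ...]


@dataclass
class Memoryset:
    """Labeled memories (embedding, label) for one use case or user."""

    memories: list[tuple[Vector, str]] = field(default_factory=list)

    def add(self, embedding: Vector, label: str) -> None:
        self.memories.append((embedding, label))


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SharedMemoryModel:
    """One model instance whose output is steered by the memoryset chosen per call."""

    def __init__(self, k: int = 3) -> None:
        self.k = k
        self.library: dict[str, Memoryset] = {}

    def register(self, key: str, memoryset: Memoryset) -> None:
        self.library[key] = memoryset

    def predict(self, embedding: Vector, memoryset_key: str) -> str:
        # Attach the requested memoryset for this inference only.
        memories = self.library[memoryset_key].memories
        # Retrieve the k memories most similar to the query.
        nearest = sorted(
            memories, key=lambda m: cosine(embedding, m[0]), reverse=True
        )[: self.k]
        # Majority vote over the retrieved labels.
        return Counter(label for _, label in nearest).most_common(1)[0][0]


# Usage: the same query embedding yields different results per user memoryset.
model = SharedMemoryModel(k=1)
alice = Memoryset()
alice.add((1.0, 0.0), "running shoes")
alice.add((0.0, 1.0), "yoga mats")
bob = Memoryset()
bob.add((1.0, 0.0), "hiking boots")
bob.add((0.0, 1.0), "tents")
model.register("user:alice", alice)
model.register("user:bob", bob)
print(model.predict((0.9, 0.1), "user:alice"))  # running shoes
print(model.predict((0.9, 0.1), "user:bob"))  # hiking boots
```

Only one model object exists in this sketch; per-user behavior lives entirely in the data passed at inference time.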


"CI/CD-like" cycle times for ML/AI Ops

Because the memory datasets that steer Orca models can be updated continuously, batch retraining workflows give way to incremental updates, online learning, and as-needed human approval and editing. Transparent instrumentation of the model's deterministic reasoning, combined with bulk acceptance of data-editing recommendations, unlocks multiple automation workflows that shorten cycle times.
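
As a rough illustration of replacing a retraining loop with in-place data updates plus human approval, the sketch below applies high-confidence edit recommendations automatically and sends the rest to a reviewer as one batch. It is an assumption-laden stand-in, not Orca's workflow: `ProposedEdit`, `LiveMemoryset`, `process_edits`, the `auto_accept_threshold` parameter, and the example labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedEdit:
    """Hypothetical recommendation to (re)label a memory, e.g. from feedback."""

    memory_id: str
    new_label: str
    confidence: float


class LiveMemoryset:
    """Hypothetical memoryset edited in place; no retraining step is involved."""

    def __init__(self) -> None:
        self.labels: dict[str, str] = {}
        self.version = 0  # bumped on every applied edit

    def apply(self, edit: ProposedEdit) -> None:
        self.labels[edit.memory_id] = edit.new_label
        self.version += 1


def process_edits(
    memoryset: LiveMemoryset,
    edits: list[ProposedEdit],
    auto_accept_threshold: float,
    human_review: Callable[[list[ProposedEdit]], list[ProposedEdit]],
) -> list[ProposedEdit]:
    """Auto-apply confident edits; route the rest to a human as one batch."""
    auto = [e for e in edits if e.confidence >= auto_accept_threshold]
    pending = [e for e in edits if e.confidence < auto_accept_threshold]
    approved = human_review(pending) if pending else []
    applied = auto + approved
    for edit in applied:
        memoryset.apply(edit)
    return applied


def approve_above_half(batch: list[ProposedEdit]) -> list[ProposedEdit]:
    # Stand-in for a reviewer who bulk-accepts moderately confident edits.
    return [e for e in batch if e.confidence > 0.5]


ms = LiveMemoryset()
edits = [
    ProposedEdit("m1", "refund_request", 0.97),
    ProposedEdit("m2", "billing_question", 0.62),
    ProposedEdit("m3", "spam", 0.40),
]
applied = process_edits(ms, edits, auto_accept_threshold=0.9, human_review=approve_above_half)
print([e.memory_id for e in applied])  # ['m1', 'm2']
print(ms.version)  # 2
```

The threshold is the knob that trades automation against oversight: raising it routes more edits to human review, lowering it lets more changes flow straight into the live memoryset.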

Orca’s memory-controlled deep learning system enables new operational models that resemble modern software engineering practice: one algorithm leveraging many datasets for many use cases, continuous updates and deployment, and automated online instrumentation with actionable insights.

Talk to Orca

Speak with our engineering team to learn how we can help you unlock high-performance agentic AI / LLM evaluation, real-time adaptive ML, and accelerated AI operations.