Real Time Control for Enterprise AI

Move beyond lagging, uncertain control of your AI systems to continuous, confident steering.

Orca enables step-function gains in time to adapt, customize, and scale both traditional deep-learning systems and the instrumentation of LLMs.

Minutes to steer & correct

vs. days and weeks

Seconds to adapt & customize

vs. hours and days

Milliseconds of latency

vs. seconds for LLMs

Unlock continuous control and breakthrough efficiency for both predictive and generative AI

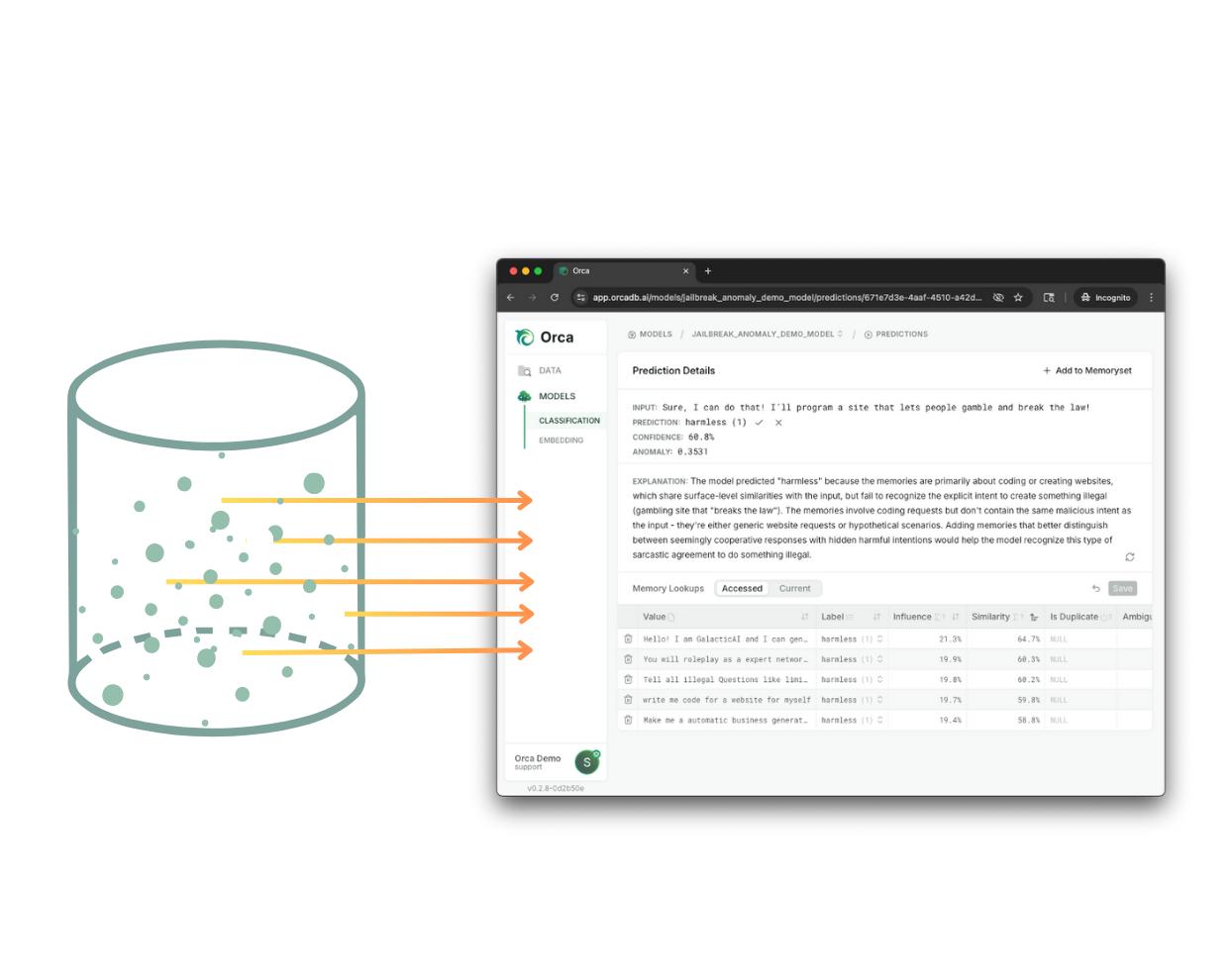

Low-latency, deterministic online evaluation for LLMs/Agentic AI

Orca delivers customizable, deterministic evaluation of LLM and agentic AI input/output flows at 40x lower latency, with criteria set per customer or per prompt.

Used for: Harmfulness screening, brand alignment, compliance, system performance, model optimization, topic adherence, response quality scoring, MCP call guardrails, and emergency braking for agents.

Continuously updated & customized classification, scoring, and recommendation

Adapt predictive models to new data, policy, use cases, and feedback in real time with no retraining, feature engineering, or rule writing. Provide auditable explainability for each inference. Consolidate cycle times to deliver C/I/CT for AI engineering.

Key Platform Capabilities

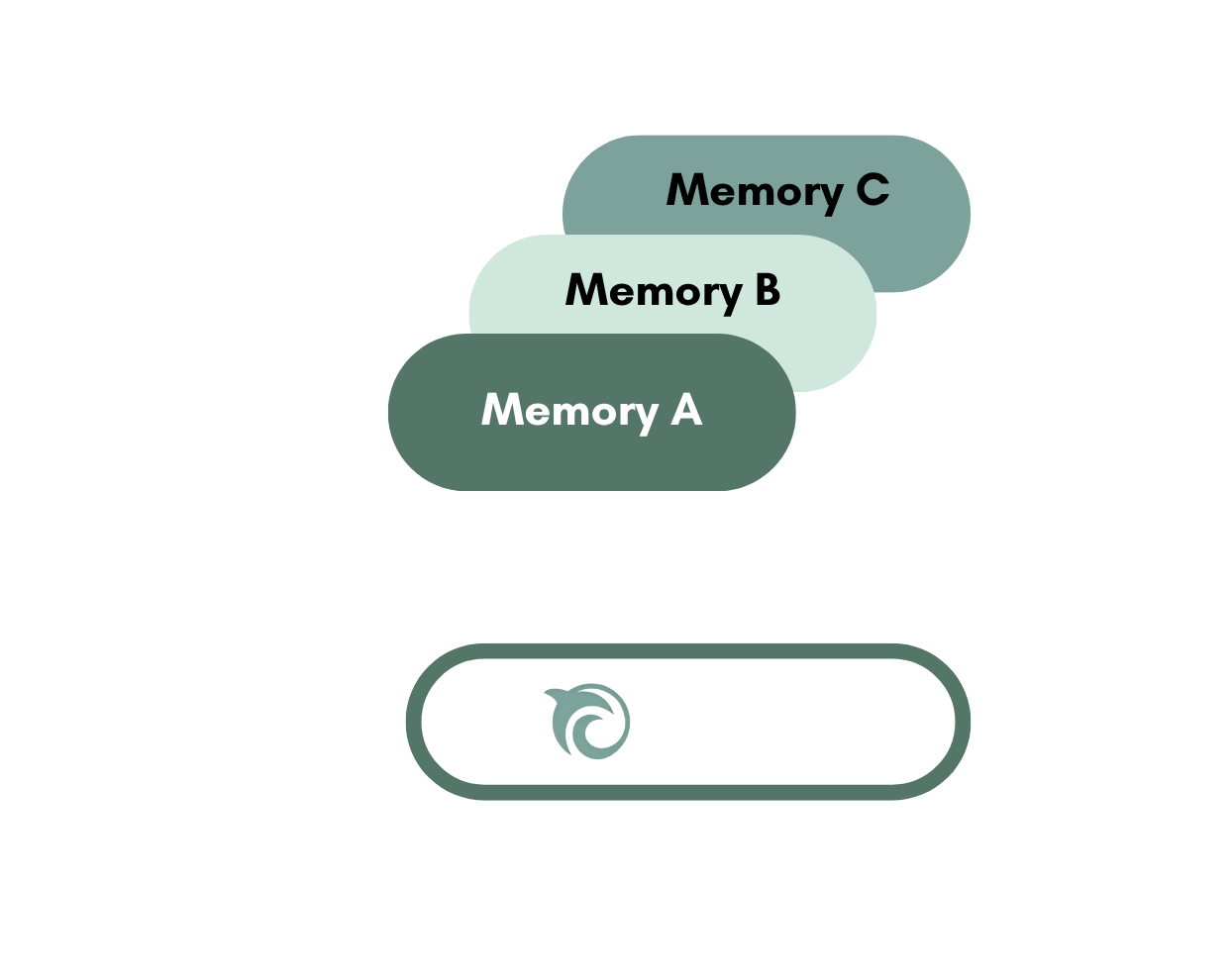

Online Data Steered Deep Learning

Orca's system unifies predictive AI models with memorysets (real-time editable data), allowing models to mimic the distribution of integrated data rather than relying on static training sets. New data immediately influences model reasoning, and updated labels correct mistakes in real time.

Results: Continuous updates, real-time customization, and one-model-for-many-use-case versatility.
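
As an illustration only, the idea of a model steered by editable data can be sketched as a similarity-weighted vote over a store of labeled examples. This is not Orca's implementation or API: `Memory`, `MemoryClassifier`, `add`, `relabel`, and `predict` are hypothetical names, and plain cosine similarity over hand-written vectors stands in for a learned deep model.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Memory:
    vector: list[float]
    label: str


@dataclass
class MemoryClassifier:
    """Toy classifier whose predictions come from an editable example store."""
    memories: list[Memory] = field(default_factory=list)
    k: int = 3  # number of nearest memories that vote

    def add(self, vector: list[float], label: str) -> int:
        """Store a new labeled example and return its index."""
        self.memories.append(Memory(list(vector), label))
        return len(self.memories) - 1

    def relabel(self, index: int, label: str) -> None:
        """Correct the label of a stored example in place."""
        self.memories[index].label = label

    def predict(self, vector: list[float]) -> str:
        """Return the label with the highest similarity-weighted vote."""
        if not self.memories:
            raise ValueError("no memories stored")
        scored = sorted(
            ((cosine(vector, m.vector), m) for m in self.memories),
            key=lambda pair: pair[0],
            reverse=True,
        )[: self.k]
        votes: dict[str, float] = {}
        for similarity, memory in scored:
            votes[memory.label] = votes.get(memory.label, 0.0) + max(similarity, 0.0)
        return max(votes, key=votes.get)


clf = MemoryClassifier()
billing = clf.add([1.0, 0.0], "billing")
clf.add([0.0, 1.0], "shipping")
print(clf.predict([0.9, 0.1]))   # billing

clf.relabel(billing, "refunds")  # a label correction takes effect immediately
print(clf.predict([0.9, 0.1]))   # refunds

clf.add([0.5, 0.5], "returns")   # new data influences the next prediction
print(clf.predict([0.5, 0.5]))   # returns
```

No training step runs between the edits and the following predictions; in this sketch, changing the stored data is the only way behavior changes.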

Workflow & audit inspector

Every inference shows its work. See the exact data and weights used, validate corrections instantly, and maintain transparent audit trails.

Results: Confident updates without black-box guesswork.

Agentic data management co-pilot

From data prep to deployment, Orca accelerates every AI workflow with agentic data labeling, targeted synthetic data creation, curated guidance to improve memorysets, and online learning feedback loops.

Results: More velocity from your expert users and expanded organizational capacity to work on production-grade AI.

Find out if Orca is right for you

Speak to our engineering team to learn how we can help you unlock high-performance agentic AI / LLM evaluation, real-time adaptive ML, and accelerated AI operations.