Customer support chatbots

Customer support chatbots must stay within strict topic boundaries, respond with brand-safe and compliant language, and accurately route users to the right workflow. Most teams rely on either no guardrails or slow, manual guardrails that can’t keep up with new product updates, new policies, or newly prohibited topics.

Traditional LLM-based guardrails introduce latency, are expensive to tune, and are inconsistent under load. Rule-based systems become brittle and hard to maintain.

Orca provides a controllable, low-latency evaluation layer designed for production-grade support chatbots.

The problem with current chatbot guardrails

Slow or manual updates

Adding a new prohibited topic, adjusting routing logic, or refining accuracy checks means updating prompts, policies, or training data. This often takes hours to days, especially in regulated or high-risk support flows.

Latency from LLM-as-judge guardrails

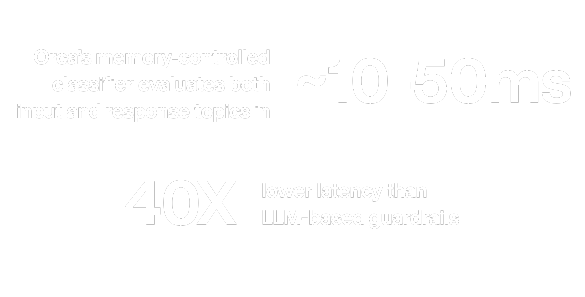

Evaluating every user query and every bot response with an LLM adds anywhere from hundreds of milliseconds to several seconds of latency per turn. At scale, inline evaluation becomes impractical.

Lack of determinism

LLM outputs vary from run to run, which is problematic for compliance, sensitive domains, and strict brand-voice enforcement.

Hard to customize per use case

Each product line, region, customer tier, or support workflow may need different topic boundaries and accuracy checks. LLM prompts and rule engines don't scale to that many variants without becoming unmanageable.

System prompts only get you so far

LLMs seem remarkably easy to steer and control with the system prompt, but in practice, adherence to guardrails embedded in the system prompt is far from perfect and insufficient for many use cases. A good system prompt, including instructions on what the model is and isn't allowed to do, is essential for robust LLM deployments, but it is not enough to ensure high reliability.

Orca's solution:

Real-time, deterministic chatbot evaluation

1. Ultra-low-latency topic adherence checks

2. Dynamic, per-interaction criteria

Each chatbot turn can load a different memoryset:

- per product or feature

- per language or region

- per customer segment

- per regulatory requirement

This avoids maintaining dozens of separate models or rulesets.
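To make per-interaction selection concrete, here is a minimal Python sketch of how an application might pick a memoryset for each turn. The `TurnContext` fields, the `MEMORYSETS` registry, the memoryset names, and the `"*"` wildcard convention are hypothetical illustrations, not Orca's API; the idea is simply that the most specific matching entry wins, with a global default as the fallback.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnContext:
    product: str
    region: str
    segment: str


# Hypothetical registry mapping (product, region, segment) to a memoryset name.
# "*" matches any value. Order matters: earlier entries win ties.
MEMORYSETS: dict[tuple[str, str, str], str] = {
    ("billing", "eu", "*"): "billing-eu-gdpr",
    ("billing", "*", "*"): "billing-global",
    ("*", "*", "enterprise"): "enterprise-default",
    ("*", "*", "*"): "global-default",
}


def select_memoryset(ctx: TurnContext) -> str:
    """Return the most specific memoryset whose key matches this turn's context."""
    values = (ctx.product, ctx.region, ctx.segment)
    best_name, best_score = "", -1
    for key, name in MEMORYSETS.items():
        if all(k == "*" or k == v for k, v in zip(key, values)):
            score = sum(k != "*" for k in key)  # number of non-wildcard fields
            if score > best_score:  # strict ">" keeps the earlier entry on ties
                best_name, best_score = name, score
    return best_name


print(select_memoryset(TurnContext("billing", "eu", "consumer")))     # billing-eu-gdpr
print(select_memoryset(TurnContext("shipping", "us", "enterprise")))  # enterprise-default
```

Because the catch-all entry matches every context, each turn always resolves to some memoryset, and supporting a new region or segment is one more registry line rather than a new model.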

3. Instant updates with no retraining

If a new topic becomes prohibited or a new workflow is launched, teams simply edit the memoryset. The model updates immediately, eliminating retraining cycles.
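An edit can take effect immediately because a memory-based classifier consults its stored examples at prediction time instead of baking them into model weights. The sketch below illustrates that general idea with a toy nearest-neighbor classifier; it is not Orca's implementation, and the bag-of-words `embed` function stands in for the learned embedding model a production system would use. `MemoryClassifier` and its methods are hypothetical names.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a production system would use a learned embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryClassifier:
    """k-nearest-neighbor classifier whose only 'training data' is an editable memory."""

    def __init__(self, k: int = 1):
        self.k = k
        self.memories: list[tuple[str, str]] = []  # (example text, label)

    def add(self, text: str, label: str) -> None:
        # Editing the memory is the whole update: there are no weights to retrain.
        self.memories.append((text, label))

    def predict(self, query: str) -> str:
        q = embed(query)
        # Re-embeds memories on every call for brevity; real systems index them.
        ranked = sorted(self.memories, key=lambda m: cosine(q, embed(m[0])), reverse=True)
        votes = Counter(label for _, label in ranked[: self.k])
        return votes.most_common(1)[0][0]


clf = MemoryClassifier(k=1)
clf.add("how do i reset my password", "allowed")
clf.add("where is my refund", "allowed")
print(clf.predict("should i buy crypto with my refund"))  # allowed

# A new policy prohibits investment advice: add one example, no retraining.
clf.add("should i invest in crypto", "prohibited")
print(clf.predict("should i buy crypto with my refund"))  # prohibited
```

Adding the single `prohibited` example changes the verdict on the very next call, with no training step in between.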

4. Deterministic scoring & explainability

Each evaluation is grounded in explicitly referenced memories, giving stable, reproducible results and transparent audit trails for compliance review.
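As an illustration of how memory-grounded scoring can be both reproducible and auditable, the sketch below returns the IDs and similarity scores of the memories it relied on alongside the label. It uses token-set Jaccard similarity as a stand-in for embedding similarity and breaks ties by `memory_id`, so identical inputs always yield identical outputs. `Memory`, `Evaluation`, and `evaluate` are hypothetical names, not part of any Orca SDK.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Memory:
    memory_id: str
    text: str
    label: str


@dataclass(frozen=True)
class Evaluation:
    label: str
    cited_memory_ids: tuple[str, ...]  # audit trail: which memories drove the verdict
    scores: tuple[float, ...]


def jaccard(a: str, b: str) -> float:
    """Token-set overlap; a stand-in for embedding similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def evaluate(query: str, memories: list[Memory], k: int = 2) -> Evaluation:
    # Rank by similarity (descending), breaking ties by memory_id so the
    # ordering, and therefore the verdict, is identical on every run.
    ranked = sorted(memories, key=lambda m: (-jaccard(query, m.text), m.memory_id))[:k]
    labels = [m.label for m in ranked]
    # Majority label among the cited memories; ties go to the higher-ranked one.
    label = max(labels, key=lambda lab: (labels.count(lab), -labels.index(lab)))
    return Evaluation(
        label=label,
        cited_memory_ids=tuple(m.memory_id for m in ranked),
        scores=tuple(round(jaccard(query, m.text), 3) for m in ranked),
    )


memories = [
    Memory("m1", "cancel my subscription", "account_management"),
    Memory("m2", "my card was charged twice", "billing"),
    Memory("m3", "app crashes on login", "technical"),
    Memory("m4", "refund for duplicate charge on my card", "billing"),
]
result = evaluate("i was charged twice on my card", memories)
print(json.dumps(asdict(result)))
# {"label": "billing", "cited_memory_ids": ["m2", "m4"], "scores": [0.714, 0.273]}
```

The `cited_memory_ids` field is what a compliance reviewer would inspect: each verdict points back to specific, human-readable examples.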

5. Jailbreak protections

Detect unwanted and unsafe interactions across security and business criteria, so you can block jailbreaking attempts that harm your organization and brand.

Evaluations you can run inline

- Topic adherence (allowed vs. disallowed topics)

- Brand / voice compliance

- Accuracy checks: does the response match known product info?

- Workflow routing (billing vs. technical issue vs. account management)

- Escalation flags (uncertainty, sensitive topics, legal/compliance triggers)

- Hallucination detection (response contradicts known knowledge)

All of these criteria can be applied per message, with different memorysets swapped in real time depending on context.

Example workflow

1. User asks a question

2. Orca evaluates input topic, intent, and whether deeper review is required

3. LLM generates a draft response

4. Orca evaluates the response for accuracy, topic adherence, compliance, and brand guidance

5. If issues are detected, the system escalates to a human, regenerates with constrained instructions, or routes to a deterministic workflow

This entire loop runs inline without adding noticeable latency.
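The five steps above can be sketched as a small control loop. In this Python sketch, `check_input`, `generate`, and `check_response` are hypothetical callables standing in for Orca's input evaluation, the LLM call, and Orca's response evaluation; the stub implementations, the issue labels, and the `"escalate"` convention are invented for illustration only, and routing is simplified to happen at the input check.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Action(Enum):
    SEND = "send"
    ESCALATE = "escalate"
    ROUTE = "route"


@dataclass
class CheckResult:
    passed: bool
    issues: List[str] = field(default_factory=list)


def handle_turn(
    user_msg: str,
    check_input: Callable[[str], CheckResult],
    generate: Callable[[str, List[str]], str],
    check_response: Callable[[str, str], CheckResult],
    max_regenerations: int = 1,
) -> Tuple[Action, Optional[str]]:
    # Steps 1-2: evaluate the user's message before any LLM call.
    if not check_input(user_msg).passed:
        return Action.ROUTE, None  # hand off to a deterministic workflow
    constraints: List[str] = []
    for _ in range(max_regenerations + 1):
        # Step 3: draft a reply, passing any constraints learned from failed checks.
        draft = generate(user_msg, constraints)
        # Step 4: evaluate the draft.
        verdict = check_response(user_msg, draft)
        if verdict.passed:
            return Action.SEND, draft
        if "escalate" in verdict.issues:
            return Action.ESCALATE, None
        # Step 5: regenerate with the detected issues as explicit constraints.
        constraints.extend(verdict.issues)
    return Action.ESCALATE, None  # retry budget exhausted: hand off to a human


# Hypothetical stubs standing in for real evaluators and a real LLM.
def stub_check_input(msg: str) -> CheckResult:
    on_topic = any(word in msg.lower() for word in ("billing", "refund", "password"))
    return CheckResult(on_topic, [] if on_topic else ["off_topic"])


def stub_generate(msg: str, constraints: List[str]) -> str:
    if "no_refund_promises" in constraints:
        return "I've opened a ticket; an agent will review your refund."
    return "Your refund is guaranteed within 24 hours!"


def stub_check_response(msg: str, draft: str) -> CheckResult:
    bad = "guaranteed" in draft
    return CheckResult(not bad, ["no_refund_promises"] if bad else [])


print(handle_turn("Where is my refund?", stub_check_input, stub_generate, stub_check_response))
```

Capping regenerations keeps worst-case latency bounded: a draft that still fails after the retry budget goes to a human rather than looping.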

Where this is a fit

- Support chatbots with strict safety/compliance requirements

- Enterprises with multiple product lines and region-specific rules

- Teams frustrated with slow-to-update manual guardrails

- Systems requiring determinism and traceability for audits

- Organizations needing low-latency evaluation at scale

Talk to Orca

Speak to our engineering team to learn how we can help you unlock high-performance agentic AI / LLM evaluation, real-time adaptive ML, and accelerated AI operations.